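The ApiDemos sample in the Android SDK includes `Sensors.java`, which shows how to read sensor data with `SensorManager`. The emulator, however, cannot simulate sensor movement. This article therefore replaces `SensorManager` with `SensorManagerSimulator`, using the following steps: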

1. First, create a project named Sensors:

package com.example.sensor;

import android.app.Activity;

import android.os.Bundle;

public class Sensors extends Activity {

/** Called when the activity is first created. */

@Override

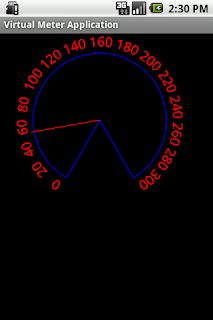

public void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.main);

}

}

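2. Copy `Sensors.java` from the `os` package of ApiDemos into the Sensors project, and change its package declaration to `com.example.sensor`. The code is as follows: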

```java
package com.example.sensor;

import android.app.Activity;
import android.content.Context;
import android.graphics.Bitmap;
import android.graphics.Canvas;
import android.graphics.Color;
import android.graphics.Paint;
import android.graphics.Path;
import android.graphics.RectF;
import android.hardware.SensorListener;
import android.hardware.SensorManager;
import android.os.Bundle;
import android.view.View;

public class Sensors extends Activity {
    /** Tag string for our debug logs */
    private static final String TAG = "Sensors";

    private SensorManager mSensorManager;
    private GraphView mGraphView;

    private class GraphView extends View implements SensorListener {
        private Bitmap mBitmap;
        private Paint mPaint = new Paint();
        private Canvas mCanvas = new Canvas();
        private Path mPath = new Path();
        private RectF mRect = new RectF();
        private float mLastValues[] = new float[3*2];
        private float mOrientationValues[] = new float[3];
        private int mColors[] = new int[3*2];
        private float mLastX;
        private float mScale[] = new float[2];
        private float mYOffset;
        private float mMaxX;
        private float mSpeed = 1.0f;
        private float mWidth;
        private float mHeight;

        public GraphView(Context context) {
            super(context);
            mColors[0] = Color.argb(192, 255, 64, 64);
            mColors[1] = Color.argb(192, 64, 128, 64);
            mColors[2] = Color.argb(192, 64, 64, 255);
            mColors[3] = Color.argb(192, 64, 255, 255);
            mColors[4] = Color.argb(192, 128, 64, 128);
            mColors[5] = Color.argb(192, 255, 255, 64);
            mPaint.setFlags(Paint.ANTI_ALIAS_FLAG);
            mRect.set(-0.5f, -0.5f, 0.5f, 0.5f);
            mPath.arcTo(mRect, 0, 180);
        }

        @Override
        protected void onSizeChanged(int w, int h, int oldw, int oldh) {
            mBitmap = Bitmap.createBitmap(w, h, Bitmap.Config.RGB_565);
            mCanvas.setBitmap(mBitmap);
            mCanvas.drawColor(0xFFFFFFFF);
            mYOffset = h * 0.5f;
            mScale[0] = - (h * 0.5f * (1.0f / (SensorManager.STANDARD_GRAVITY * 2)));
            mScale[1] = - (h * 0.5f * (1.0f / (SensorManager.MAGNETIC_FIELD_EARTH_MAX)));
            mWidth = w;
            mHeight = h;
            if (mWidth < mHeight) {
                mMaxX = w;
            } else {
                mMaxX = w-50;
            }
            mLastX = mMaxX;
            super.onSizeChanged(w, h, oldw, oldh);
        }

        @Override
        protected void onDraw(Canvas canvas) {
            synchronized (this) {
                if (mBitmap != null) {
                    final Paint paint = mPaint;
                    final Path path = mPath;
                    final int outer = 0xFFC0C0C0;
                    final int inner = 0xFFff7010;

                    if (mLastX >= mMaxX) {
                        // Wrap around: clear the off-screen bitmap and redraw the 0 and +/-1g lines
                        mLastX = 0;
                        final Canvas bitmapCanvas = mCanvas;
                        final float yoffset = mYOffset;
                        final float maxx = mMaxX;
                        final float oneG = SensorManager.STANDARD_GRAVITY * mScale[0];
                        paint.setColor(0xFFAAAAAA);
                        bitmapCanvas.drawColor(0xFFFFFFFF);
                        bitmapCanvas.drawLine(0, yoffset, maxx, yoffset, paint);
                        bitmapCanvas.drawLine(0, yoffset+oneG, maxx, yoffset+oneG, paint);
                        bitmapCanvas.drawLine(0, yoffset-oneG, maxx, yoffset-oneG, paint);
                    }
                    canvas.drawBitmap(mBitmap, 0, 0, null);

                    float[] values = mOrientationValues;
                    if (mWidth < mHeight) {
                        // Portrait: three orientation dials in a row along the top
                        float w0 = mWidth * 0.333333f;
                        float w = w0 - 32;
                        float x = w0*0.5f;
                        for (int i=0 ; i<3 ; i++) {
                            canvas.save(Canvas.MATRIX_SAVE_FLAG);
                            canvas.translate(x, w*0.5f + 4.0f);
                            canvas.save(Canvas.MATRIX_SAVE_FLAG);
                            paint.setColor(outer);
                            canvas.scale(w, w);
                            canvas.drawOval(mRect, paint);
                            canvas.restore();
                            canvas.scale(w-5, w-5);
                            paint.setColor(inner);
                            canvas.rotate(-values[i]);
                            canvas.drawPath(path, paint);
                            canvas.restore();
                            x += w0;
                        }
                    } else {
                        // Landscape: three orientation dials in a column along the right edge
                        float h0 = mHeight * 0.333333f;
                        float h = h0 - 32;
                        float y = h0*0.5f;
                        for (int i=0 ; i<3 ; i++) {
                            canvas.save(Canvas.MATRIX_SAVE_FLAG);
                            canvas.translate(mWidth - (h*0.5f + 4.0f), y);
                            canvas.save(Canvas.MATRIX_SAVE_FLAG);
                            paint.setColor(outer);
                            canvas.scale(h, h);
                            canvas.drawOval(mRect, paint);
                            canvas.restore();
                            canvas.scale(h-5, h-5);
                            paint.setColor(inner);
                            canvas.rotate(-values[i]);
                            canvas.drawPath(path, paint);
                            canvas.restore();
                            y += h0;
                        }
                    }
                }
            }
        }

        public void onSensorChanged(int sensor, float[] values) {
            //Log.d(TAG, "sensor: " + sensor + ", x: " + values[0] + ", y: " + values[1] + ", z: " + values[2]);
            synchronized (this) {
                if (mBitmap != null) {
                    final Canvas canvas = mCanvas;
                    final Paint paint = mPaint;
                    if (sensor == SensorManager.SENSOR_ORIENTATION) {
                        // Orientation only updates the dial angles drawn in onDraw()
                        for (int i=0 ; i<3 ; i++) {
                            mOrientationValues[i] = values[i];
                        }
                    } else {
                        float deltaX = mSpeed;
                        float newX = mLastX + deltaX;
                        // j selects the scale (0 = accelerometer, 1 = magnetic field);
                        // k = i + j*3 selects one of six colour/last-value slots
                        int j = (sensor == SensorManager.SENSOR_MAGNETIC_FIELD) ? 1 : 0;
                        for (int i=0 ; i<3 ; i++) {
                            int k = i+j*3;
                            final float v = mYOffset + values[i] * mScale[j];
                            paint.setColor(mColors[k]);
                            canvas.drawLine(mLastX, mLastValues[k], newX, v, paint);
                            mLastValues[k] = v;
                        }
                        // Advance the trace once per magnetic-field reading
                        if (sensor == SensorManager.SENSOR_MAGNETIC_FIELD) {
                            mLastX += mSpeed;
                        }
                    }
                    invalidate();
                }
            }
        }

        public void onAccuracyChanged(int sensor, int accuracy) {
            // Accuracy changes are ignored in this demo
        }
    }

    /**
     * Initialization of the Activity after it is first created. Must at least
     * call {@link android.app.Activity#setContentView setContentView()} to
     * describe what is to be displayed in the screen.
     */
    @Override
    protected void onCreate(Bundle savedInstanceState) {
        // Be sure to call the super class.
        super.onCreate(savedInstanceState);
        mSensorManager = (SensorManager) getSystemService(SENSOR_SERVICE);
        mGraphView = new GraphView(this);
        setContentView(mGraphView);
    }

    @Override
    protected void onResume() {
        super.onResume();
        mSensorManager.registerListener(mGraphView,
                SensorManager.SENSOR_ACCELEROMETER |
                SensorManager.SENSOR_MAGNETIC_FIELD |
                SensorManager.SENSOR_ORIENTATION,
                SensorManager.SENSOR_DELAY_FASTEST);
    }

    @Override
    protected void onStop() {
        mSensorManager.unregisterListener(mGraphView);
        super.onStop();
    }
}
```
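`onSizeChanged()` and `onSensorChanged()` together map each reading to a pixel row. Zero sits on the centre line. ±2g (accelerometer) or ±`MAGNETIC_FIELD_EARTH_MAX` (magnetic field) reaches the top and bottom edges. The sign is negative because screen y grows downward. The plain-Java sketch below reproduces that arithmetic so it can be checked on a desktop JVM without the Android SDK. The class name is hypothetical, the two constants are copied from `SensorManager`, and the 480-pixel height is an arbitrary example.

```java
// Plain-Java sketch of the strip-chart scaling in GraphView. The two constants are
// copied from android.hardware.SensorManager so the sketch runs without the SDK.
final class GraphScale {
    static final float STANDARD_GRAVITY = 9.80665f;      // SensorManager.STANDARD_GRAVITY (m/s^2)
    static final float MAGNETIC_FIELD_EARTH_MAX = 60.0f; // SensorManager.MAGNETIC_FIELD_EARTH_MAX (uT)

    /** mScale[0]: +/-2g fills half the view height; negative because screen y grows downward. */
    static float accelScale(int h) {
        return -(h * 0.5f * (1.0f / (STANDARD_GRAVITY * 2)));
    }

    /** mScale[1]: +/-MAGNETIC_FIELD_EARTH_MAX fills half the view height. */
    static float magneticScale(int h) {
        return -(h * 0.5f * (1.0f / MAGNETIC_FIELD_EARTH_MAX));
    }

    /** Same mapping as onSensorChanged(): v = mYOffset + values[i] * mScale[j]. */
    static float toY(int h, float value, float scale) {
        float yOffset = h * 0.5f; // mYOffset: zero is drawn on the vertical centre line
        return yOffset + value * scale;
    }

    public static void main(String[] args) {
        int h = 480; // hypothetical view height in pixels
        float s = accelScale(h);
        System.out.println("0g  -> y = " + toY(h, 0f, s));
        System.out.println("+1g -> y = " + toY(h, STANDARD_GRAVITY, s));
        System.out.println("+2g -> y = " + toY(h, 2 * STANDARD_GRAVITY, s));
        System.out.println("-2g -> y = " + toY(h, -2 * STANDARD_GRAVITY, s));
    }
}
```

The grey guide lines drawn in `onDraw()` use the same mapping applied to ±`STANDARD_GRAVITY`. They therefore sit a quarter of the view height above and below the centre line.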

3. From the sensor simulator package, copy the `lib` directory under `sensorsimulator-1.0.0-beta1\samples\SensorDemo` into the Sensors project.

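4. Add the Internet permission to `AndroidManifest.xml`, because the sensor simulator communicates with the application over TCP/IP. The added line is the `<uses-permission>` element at the end: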

```xml
<?xml version="1.0" encoding="utf-8"?>
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.example.sensor"
    android:versionCode="1"
    android:versionName="1.0">
    <application android:icon="@drawable/icon" android:label="@string/app_name">
        <activity android:name=".Sensors"
            android:label="@string/app_name">
            <intent-filter>
                <action android:name="android.intent.action.MAIN" />
                <category android:name="android.intent.category.LAUNCHER" />
            </intent-filter>
        </activity>
    </application>
    <uses-sdk android:minSdkVersion="2" />
    <uses-permission android:name="android.permission.INTERNET" />
</manifest>
```
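The Internet permission is needed because the app and the simulator talk over TCP/IP. If the connection later fails, it can help to confirm that the simulator's port accepts connections at all. The sketch below is a generic Java socket check and is not part of SensorSimulator; the class name is hypothetical. The default port 8010 is an assumption about SensorSimulator's default setting, so use whatever port its window shows.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Sketch: test whether a TCP port accepts connections. This is a generic check,
// not SensorSimulator code; the default host and port in main() are assumptions.
final class PortCheck {
    /** Returns true if a TCP connection to host:port succeeds within timeoutMs. */
    static boolean isReachable(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            return false; // refused, timed out, or unknown host
        }
    }

    public static void main(String[] args) {
        // On the development machine use "localhost"; inside the emulator the same
        // machine is 10.0.2.2. Port 8010 is an assumed SensorSimulator default.
        String host = args.length > 0 ? args[0] : "localhost";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 8010;
        System.out.println(host + ":" + port + " reachable: " + isReachable(host, port, 2000));
    }
}
```

Run it on the development machine while the SensorSimulator program is open. If it reports `false` there, the problem lies on the desktop side rather than in the Android app.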

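5. Add the code that drives the sensor simulator. The changes are the `SensorManagerSimulator` import, the type of the `mSensorManager` field, and the two lines in `onCreate()` that obtain the simulator and call `connectSimulator()`: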

```java
package com.example.sensor;

import org.openintents.sensorsimulator.hardware.SensorManagerSimulator;

import android.app.Activity;
import android.content.Context;
import android.graphics.Bitmap;
import android.graphics.Canvas;
import android.graphics.Color;
import android.graphics.Paint;
import android.graphics.Path;
import android.graphics.RectF;
import android.hardware.SensorListener;
import android.hardware.SensorManager;
import android.os.Bundle;
import android.view.View;

public class Sensors extends Activity {
    /** Tag string for our debug logs */
    private static final String TAG = "Sensors";

    // private SensorManager mSensorManager;
    private SensorManagerSimulator mSensorManager; // changed: simulator instead of the real SensorManager
    private GraphView mGraphView;

    private class GraphView extends View implements SensorListener {
        private Bitmap mBitmap;
        private Paint mPaint = new Paint();
        private Canvas mCanvas = new Canvas();
        private Path mPath = new Path();
        private RectF mRect = new RectF();
        private float mLastValues[] = new float[3*2];
        private float mOrientationValues[] = new float[3];
        private int mColors[] = new int[3*2];
        private float mLastX;
        private float mScale[] = new float[2];
        private float mYOffset;
        private float mMaxX;
        private float mSpeed = 1.0f;
        private float mWidth;
        private float mHeight;

        public GraphView(Context context) {
            super(context);
            mColors[0] = Color.argb(192, 255, 64, 64);
            mColors[1] = Color.argb(192, 64, 128, 64);
            mColors[2] = Color.argb(192, 64, 64, 255);
            mColors[3] = Color.argb(192, 64, 255, 255);
            mColors[4] = Color.argb(192, 128, 64, 128);
            mColors[5] = Color.argb(192, 255, 255, 64);
            mPaint.setFlags(Paint.ANTI_ALIAS_FLAG);
            mRect.set(-0.5f, -0.5f, 0.5f, 0.5f);
            mPath.arcTo(mRect, 0, 180);
        }

        @Override
        protected void onSizeChanged(int w, int h, int oldw, int oldh) {
            mBitmap = Bitmap.createBitmap(w, h, Bitmap.Config.RGB_565);
            mCanvas.setBitmap(mBitmap);
            mCanvas.drawColor(0xFFFFFFFF);
            mYOffset = h * 0.5f;
            mScale[0] = - (h * 0.5f * (1.0f / (SensorManager.STANDARD_GRAVITY * 2)));
            mScale[1] = - (h * 0.5f * (1.0f / (SensorManager.MAGNETIC_FIELD_EARTH_MAX)));
            mWidth = w;
            mHeight = h;
            if (mWidth < mHeight) {
                mMaxX = w;
            } else {
                mMaxX = w-50;
            }
            mLastX = mMaxX;
            super.onSizeChanged(w, h, oldw, oldh);
        }

        @Override
        protected void onDraw(Canvas canvas) {
            synchronized (this) {
                if (mBitmap != null) {
                    final Paint paint = mPaint;
                    final Path path = mPath;
                    final int outer = 0xFFC0C0C0;
                    final int inner = 0xFFff7010;

                    if (mLastX >= mMaxX) {
                        // Wrap around: clear the off-screen bitmap and redraw the 0 and +/-1g lines
                        mLastX = 0;
                        final Canvas bitmapCanvas = mCanvas;
                        final float yoffset = mYOffset;
                        final float maxx = mMaxX;
                        final float oneG = SensorManager.STANDARD_GRAVITY * mScale[0];
                        paint.setColor(0xFFAAAAAA);
                        bitmapCanvas.drawColor(0xFFFFFFFF);
                        bitmapCanvas.drawLine(0, yoffset, maxx, yoffset, paint);
                        bitmapCanvas.drawLine(0, yoffset+oneG, maxx, yoffset+oneG, paint);
                        bitmapCanvas.drawLine(0, yoffset-oneG, maxx, yoffset-oneG, paint);
                    }
                    canvas.drawBitmap(mBitmap, 0, 0, null);

                    float[] values = mOrientationValues;
                    if (mWidth < mHeight) {
                        // Portrait: three orientation dials in a row along the top
                        float w0 = mWidth * 0.333333f;
                        float w = w0 - 32;
                        float x = w0*0.5f;
                        for (int i=0 ; i<3 ; i++) {
                            canvas.save(Canvas.MATRIX_SAVE_FLAG);
                            canvas.translate(x, w*0.5f + 4.0f);
                            canvas.save(Canvas.MATRIX_SAVE_FLAG);
                            paint.setColor(outer);
                            canvas.scale(w, w);
                            canvas.drawOval(mRect, paint);
                            canvas.restore();
                            canvas.scale(w-5, w-5);
                            paint.setColor(inner);
                            canvas.rotate(-values[i]);
                            canvas.drawPath(path, paint);
                            canvas.restore();
                            x += w0;
                        }
                    } else {
                        // Landscape: three orientation dials in a column along the right edge
                        float h0 = mHeight * 0.333333f;
                        float h = h0 - 32;
                        float y = h0*0.5f;
                        for (int i=0 ; i<3 ; i++) {
                            canvas.save(Canvas.MATRIX_SAVE_FLAG);
                            canvas.translate(mWidth - (h*0.5f + 4.0f), y);
                            canvas.save(Canvas.MATRIX_SAVE_FLAG);
                            paint.setColor(outer);
                            canvas.scale(h, h);
                            canvas.drawOval(mRect, paint);
                            canvas.restore();
                            canvas.scale(h-5, h-5);
                            paint.setColor(inner);
                            canvas.rotate(-values[i]);
                            canvas.drawPath(path, paint);
                            canvas.restore();
                            y += h0;
                        }
                    }
                }
            }
        }

        public void onSensorChanged(int sensor, float[] values) {
            //Log.d(TAG, "sensor: " + sensor + ", x: " + values[0] + ", y: " + values[1] + ", z: " + values[2]);
            synchronized (this) {
                if (mBitmap != null) {
                    final Canvas canvas = mCanvas;
                    final Paint paint = mPaint;
                    if (sensor == SensorManager.SENSOR_ORIENTATION) {
                        // Orientation only updates the dial angles drawn in onDraw()
                        for (int i=0 ; i<3 ; i++) {
                            mOrientationValues[i] = values[i];
                        }
                    } else {
                        float deltaX = mSpeed;
                        float newX = mLastX + deltaX;
                        // j selects the scale (0 = accelerometer, 1 = magnetic field);
                        // k = i + j*3 selects one of six colour/last-value slots
                        int j = (sensor == SensorManager.SENSOR_MAGNETIC_FIELD) ? 1 : 0;
                        for (int i=0 ; i<3 ; i++) {
                            int k = i+j*3;
                            final float v = mYOffset + values[i] * mScale[j];
                            paint.setColor(mColors[k]);
                            canvas.drawLine(mLastX, mLastValues[k], newX, v, paint);
                            mLastValues[k] = v;
                        }
                        // Advance the trace once per magnetic-field reading
                        if (sensor == SensorManager.SENSOR_MAGNETIC_FIELD) {
                            mLastX += mSpeed;
                        }
                    }
                    invalidate();
                }
            }
        }

        public void onAccuracyChanged(int sensor, int accuracy) {
            // Accuracy changes are ignored in this demo
        }
    }

    /**
     * Initialization of the Activity after it is first created. Must at least
     * call {@link android.app.Activity#setContentView setContentView()} to
     * describe what is to be displayed in the screen.
     */
    @Override
    protected void onCreate(Bundle savedInstanceState) {
        // Be sure to call the super class.
        super.onCreate(savedInstanceState);
        // mSensorManager = (SensorManager) getSystemService(SENSOR_SERVICE);
        // Changed: obtain the simulator and connect to the SensorSimulator program
        mSensorManager = SensorManagerSimulator.getSystemService(this, SENSOR_SERVICE);
        mSensorManager.connectSimulator();
        mGraphView = new GraphView(this);
        setContentView(mGraphView);
    }

    @Override
    protected void onResume() {
        super.onResume();
        mSensorManager.registerListener(mGraphView,
                SensorManager.SENSOR_ACCELEROMETER |
                SensorManager.SENSOR_MAGNETIC_FIELD |
                SensorManager.SENSOR_ORIENTATION,
                SensorManager.SENSOR_DELAY_FASTEST);
    }

    @Override
    protected void onStop() {
        mSensorManager.unregisterListener(mGraphView);
        super.onStop();
    }
}
```
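Both versions of `onResume()` register one listener for three sensors by OR-ing legacy `SensorManager` constants. This is the older `SensorListener` interface, deprecated since API level 3 in favour of `SensorEventListener`. `onSensorChanged()` is then called with a single sensor ID at a time. The plain-Java sketch below shows that bit arithmetic and the colour-slot index the graph derives from the sensor ID. The class name is hypothetical. The constants are redefined locally with the values I believe the legacy API uses (1, 2, 4, 8), so treat those numbers as an assumption and check them against your SDK.

```java
// Sketch of the legacy SensorListener bit-flag constants. The numeric values are
// reproduced here as an assumption; verify them against the SDK you build with.
final class SensorMask {
    static final int SENSOR_ORIENTATION = 1 << 0;
    static final int SENSOR_ACCELEROMETER = 1 << 1;
    static final int SENSOR_TEMPERATURE = 1 << 2;
    static final int SENSOR_MAGNETIC_FIELD = 1 << 3;

    /** True if the sensor's bit is set in a registerListener() mask. */
    static boolean includes(int mask, int sensor) {
        return (mask & sensor) != 0;
    }

    /** Colour slot used by onSensorChanged(): k = axis + j * 3, with j = 1 only for the magnetic field. */
    static int colorIndex(int sensor, int axis) {
        int j = (sensor == SENSOR_MAGNETIC_FIELD) ? 1 : 0;
        return axis + j * 3;
    }

    public static void main(String[] args) {
        int mask = SENSOR_ACCELEROMETER | SENSOR_MAGNETIC_FIELD | SENSOR_ORIENTATION;
        System.out.println("mask = " + mask);                    // prints "mask = 11"
        System.out.println(includes(mask, SENSOR_TEMPERATURE));   // prints "false"
        System.out.println(colorIndex(SENSOR_MAGNETIC_FIELD, 2)); // prints "5"
    }
}
```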

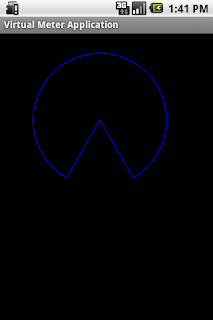

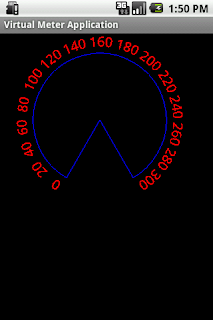

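6. On the emulator, run the sensor simulator's settings program and enter the IP address.

7. Connect, then tick the sensors you want to simulate.

8. Finally, adjust the sensors in the sensor simulator program and watch how the sensor application responds.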
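The settings program on the emulator needs the IP address of the development machine that runs the SensorSimulator program. One way to list candidates is the plain-Java sketch below, which prints the machine's non-loopback IPv4 addresses; the class name is hypothetical. Inside the standard Android emulator, the address 10.0.2.2 also refers to the host machine's loopback interface. That address is therefore worth trying when the machine has several network adapters.

```java
import java.net.Inet4Address;
import java.net.InetAddress;
import java.net.NetworkInterface;
import java.net.SocketException;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Enumeration;
import java.util.List;

// Sketch: list this machine's non-loopback IPv4 addresses, i.e. candidates
// for the IP to enter in the simulator's settings program on the emulator.
final class HostAddresses {
    static List<String> ipv4Addresses() throws SocketException {
        List<String> result = new ArrayList<>();
        Enumeration<NetworkInterface> interfaces = NetworkInterface.getNetworkInterfaces();
        if (interfaces == null) {
            return result; // some platforms report no interfaces at all
        }
        for (NetworkInterface nif : Collections.list(interfaces)) {
            if (!nif.isUp() || nif.isLoopback()) {
                continue; // skip disabled adapters and 127.0.0.1
            }
            for (InetAddress addr : Collections.list(nif.getInetAddresses())) {
                if (addr instanceof Inet4Address) {
                    result.add(addr.getHostAddress());
                }
            }
        }
        return result;
    }

    public static void main(String[] args) throws SocketException {
        for (String ip : ipv4Addresses()) {
            System.out.println(ip);
        }
    }
}
```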

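I tested the Android Sensor Simulator today and found it a lot of fun. It works by substitution: the app uses `SensorManagerSimulator` in place of `SensorManager`, as in the code above.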
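Swapping in `SensorManagerSimulator` still means editing the field type and `onCreate()`, and the edit has to be reverted for a real device. If you expect to switch back and forth, one option is to hide the sensor source behind your own small interface. The sketch below is hypothetical plain Java: `SubstitutionDemo`, `SensorSource`, `ValueListener` and `FakeSensorSource` are illustrative names and not Android or SensorSimulator APIs. It shows only the substitution idea, with a fake source that forwards whatever readings it is given.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch (not an Android or SensorSimulator API): the activity depends on
// a small interface, so switching between a real and a simulated source is one line.
final class SubstitutionDemo {
    /** Receives one reading at a time, like SensorListener.onSensorChanged(). */
    interface ValueListener {
        void onValues(int sensor, float[] values);
    }

    /** What the activity needs from any sensor source. */
    interface SensorSource {
        void register(ValueListener listener);
        void unregister(ValueListener listener);
    }

    /** Stand-in for a simulator: forwards whatever readings it is given. */
    static final class FakeSensorSource implements SensorSource {
        private final List<ValueListener> listeners = new ArrayList<>();

        @Override
        public void register(ValueListener listener) {
            listeners.add(listener);
        }

        @Override
        public void unregister(ValueListener listener) {
            listeners.remove(listener);
        }

        /** Delivers one reading to every registered listener. */
        void emit(int sensor, float... values) {
            for (ValueListener l : new ArrayList<>(listeners)) {
                l.onValues(sensor, values.clone());
            }
        }
    }

    public static void main(String[] args) {
        FakeSensorSource fake = new FakeSensorSource();
        SensorSource source = fake; // swap in a real implementation here
        ValueListener printer = (sensor, v) ->
                System.out.println("sensor " + sensor + ": x=" + v[0] + " y=" + v[1] + " z=" + v[2]);
        source.register(printer);
        fake.emit(2, 0f, 0f, 9.8f); // hypothetical accelerometer reading at rest
        source.unregister(printer);
    }
}
```

With this design, only the line that creates the source changes between emulator and device builds. The rest of the activity talks to `SensorSource`.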
